The news and Substack have been all atwitter with the impact of the massive loads that AI will bring. The green left has been clutching their pearls that this is the end of the world and we will cook in the juices of our own fat. But we really need to talk about the why: why does AI demand so much power? Well, let’s bust that first myth right out of the box. AI is software. AI is software. Just let that sink in: it’s a program, a bunch of ones and zeros on a storage device. Until you plug it into some hardware, it doesn’t do anything. So buckle up, buttercup, we are taking a drive on the technical side of data centers. I un-geeked as much as I could, but there is only so much you can do.

Early in my career I spent a fair amount of time in the data centers of the 1990s. Even then they were power hogs. A typical data center had a UPS system with a 4000-amp input and a 3200-amp output; that’s a 20% drop from energy in to energy out. Some of that energy is reserved for battery charging, but UPS efficiency is reported to be 85 to 90%. Even at 90%, that’s about 330 kW lost to heat in the UPS room. Bigger data centers may have more than one UPS. The UPS was connected to a string of submarine-style batteries that would deliver full-load energy for 10-20 minutes, depending on battery capacity. The electric rooms were typically on another floor, sectioned off from the computer floor.
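For anyone who wants to check the UPS loss figure, here is a rough sketch of the arithmetic. It assumes a 480-volt three-phase feed and a power factor of 1; neither is stated in the paragraph above, so treat both as my assumptions.

```python
import math

def three_phase_kva(volts_line_to_line: float, amps: float) -> float:
    """Apparent power of a balanced three-phase feed, in kVA."""
    return math.sqrt(3) * volts_line_to_line * amps / 1000.0

# Assumptions (not stated in the text): 480 V line-to-line, power factor of 1.
ASSUMED_VOLTS = 480
INPUT_AMPS = 4000

input_kva = three_phase_kva(ASSUMED_VOLTS, INPUT_AMPS)

# Heat left behind in the UPS room at the two efficiencies quoted in the text.
loss_kw_at_90 = input_kva * (1 - 0.90)
loss_kw_at_85 = input_kva * (1 - 0.85)

print(f"UPS input: {input_kva:.0f} kVA")
print(f"Lost to heat at 90% efficiency: {loss_kw_at_90:.0f} kW")
print(f"Lost to heat at 85% efficiency: {loss_kw_at_85:.0f} kW")
```

Under those assumptions the 90% case lands a little above 330 kW, which lines up with the figure quoted above.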

The actual computer floor consisted of a raised floor partitioned into sections for cooling air flow. Computer Room Air Handlers (CRAH) were placed at regular intervals on the computer floor, pulling warm air in the top and blowing chilled air under the floor, where it would rise up through the IBM mainframes placed at regular intervals across the computer floor. Cooling was achieved via chilled water. The newest IBM mainframes had a chilled water heat sink for the processors. Power was distributed by Power Distribution Unit (PDU) pedestals on the computer floor. In addition to the computers, some cooling equipment, including water circulation pumps, was powered by the UPS.

The mechanical cooling equipment was generally in a separate building or on the bottom floor. Cooling was via large centrifugal chillers generating a flow of chilled water. It was not unusual for the chillers to be powered by 4160-volt power due to the size of the equipment. Heat was disposed of via outdoor cooling towers. There was typically a chilled water storage tank to provide a continuous flow of cool water during a power interruption while the chiller(s) restarted.

The above description should give you a pretty clear picture that just under half the energy delivered to a data center is consumed by cooling. In addition, there are significant energy losses in the UPS system that is required to guarantee uninterrupted power to those IBM mainframes. What you should also see is that in those 1990s data centers there were lots of single points of failure that could take the data center down. Keep that in mind.

So, if at this point you are doubting that those computers make that much heat, here is an experiment to try. On your home computer, download this app: https://www.jam-software.com/heavyload . For our Mac users: https://cpu-stress-test.macupdate.com/ .

Once you have installed the software, set it up to stress test the CPU and GPU, and let it run for thirty minutes (make sure your laptop is plugged in). You will be quite surprised how warm your computer gets. Keep in mind you probably have a simple workstation with limited resources, not a powerful server, except for all you gamers, I know you! For those who don’t know, gaming computers are the cutting edge of power in a workstation. They generate a ton of heat, so they often have more fans than a hovercraft. They often have liquid cooling with internal radiators. Check this out if you’re curious: https://www.digitalstorm.com/aventum-x.asp .

Suffice it to say, we have come a very long way from the data centers of the early 1990s. My own experiences parallel the evolution. Just before moving into power operations, we completed a Distributed Control System (DCS) upgrade to our steam plant for Y2K. We moved from the old Bailey Net 90 purpose-built operator workstations to off-the-shelf PCs running interface software with the new DeltaV DCS (which has also now been replaced). In Power Operations we were using tower-based SCADA servers in a brand-specific control cabinet. Even back then the server room had its own redundant air conditioning. Fortunately, our control room was built on a raised computer floor, something that has paid for itself over and over. We transitioned from OS/2 Warp to Windows shortly after I arrived. Over the next 10 years we first transitioned from towers to rack mount servers for business, then transitioned the SCADA servers to rack mount and dumped the old proprietary cabinet. These were all individual physical computers. Next the City started adopting VMware, and our office servers disappeared and became virtual computers running on a VM server in our rack. Then our SCADA vendor went out of business, so we had to find a new vendor. The new system required a fleet of servers, not just two redundant servers. Everything went VM from then on, with VM servers in our racks. All the added load forced us to upgrade the computer room HVAC. I am sure that load has continued to grow since I retired.

Some of you are saying, what is VMware and virtualization? Let’s go back to data centers. Those IBM mainframes are all but a thing for the history books. They still have their place, but the market is very small. We can largely thank Google for the revolution in data center architecture that began around 2000. Google embraced a new concept called cluster computing. While it had been around a while, it really hadn’t taken off. Google decided the mainframe model was just too expensive and confining for what they wanted to do. So, they figured out how to make racks of commodity grade computers work together to process big jobs. This method had high fault tolerance because if a single machine died, the process slowed but did not stop. As the load increased it was inexpensive to add more commodity-based servers to the cluster. This dramatically dropped the price of computing power and ended the IBM stranglehold. Google published a white paper on their efforts that helped revolutionize the industry.

This was also when we saw cloud-based computing start to become available. AWS (Amazon Web Services) launched their cloud computing service, along with Google Cloud and Microsoft Azure. The Software as a Service (SaaS) model started to appear, with companies offering web access to software suites to meet business needs. Cloud computing and virtualization go hand in hand: the cloud is basically virtualization running on somebody else’s hardware and rented out as a service.

Where does that put us now? It has become extremely popular to just rent some virtual space from a cloud computing provider and do away with the office-based servers. A virtual server is a program that does everything a hardware server would do, except it runs in a computer cloud and uses shared hardware resources. Generally, a slice of the combined hardware resources is allocated to a virtual server when it is created, including storage space. Offices that cannot risk the business interruption of an internet outage still have internal servers. Nearly everyone has gone to rack mount equipment, and many have gone to dedicated server rooms with their own HVAC. Some have even adopted hot aisle/cold aisle, which we will talk about later. Many businesses now run their own VM servers with their own cloud environment because it is more cost effective than buying multiple dedicated servers. Multi-office businesses may run VM servers in two locations and share the cloud over a dedicated fiber connection. If one office goes down, the cloud servers in the other office take over. So, if your best friend's business is still using a tower in the hall closet, just look at him and say, “holy 1990s”.

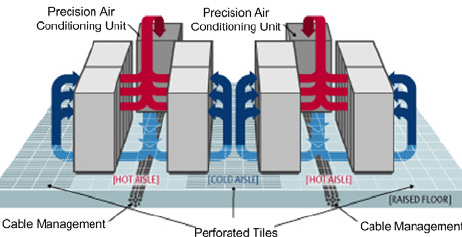

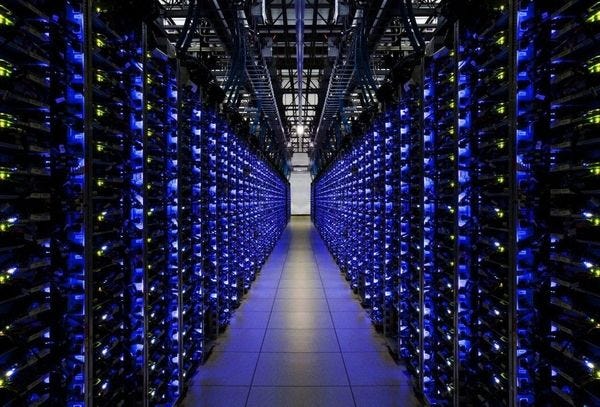

Circling back to data centers, I assume you have connected the dots that those big data centers don’t run mainframes anymore. They are still running the raised computer floor, as valuable now as it was in the beginning. The computer floor is now sectioned off into logical rooms formed by thin, easy-to-remove walls. These rooms are known as pods.

Each pod is served by redundant PDUs and redundant CRAHs, possibly several. The redundant PDUs and the CRAHs are fed from separate redundant UPS systems. The UPS systems are fed from separate redundant switchboards. UPS systems now have a 5000-amp 480Y/277-volt input and a 4000-amp output. Each pod is filled with computer racks, and each rack has a specific number of rack units, or “U”s, usually 48. A U is 1.75 inches tall by 19 inches wide; depth can vary.

They are arranged in rows so the hot aisle/cold aisle can be constructed. Rows are 10-20 racks long, with 2-6 rows per pod. They are configured with the “hot side” isolated from the “cold side”, forming hot aisles and/or cold aisles.

Typically, the pod is either hot or cold, with an enclosed aisle at the other temperature. The CRAHs typically blow chilled air under the computer floor, and it is released in front of the racks by perforated floor tiles. Hot air is drawn into the top of the CRAH. In a hot aisle arrangement, the hot side is sealed and ducted to the CRAH intake. Hot aisle arrangements are said to be more efficient. Hot side temp is around 95°F and cold side temp is typically around 68°F; however, it is the hot side temp that is controlled, so the cold side can vary. New CRAHs will have pumps and distribution manifolds for direct water-cooled servers.
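To get a feel for how much air has to move between the cold side and the hot side, here is a rough sketch using the standard HVAC rule of thumb for sensible heat, BTU/hr = 1.08 × CFM × ΔT(°F). The rule of thumb and the sea-level air it assumes are my additions, not something from the CRAH vendors; the 95°F and 68°F temperatures are the ones quoted above.

```python
BTU_PER_HR_PER_KW = 3412.14  # 1 kW expressed in BTU/hr

def airflow_cfm(heat_kw: float, hot_f: float = 95.0, cold_f: float = 68.0) -> float:
    """Approximate airflow (cubic feet per minute) needed to carry away heat_kw.

    Uses the sensible-heat rule of thumb BTU/hr = 1.08 * CFM * delta_T,
    which assumes standard air at sea level (an assumption of this sketch).
    """
    delta_t = hot_f - cold_f
    if delta_t <= 0:
        raise ValueError("hot side must be warmer than cold side")
    return heat_kw * BTU_PER_HR_PER_KW / (1.08 * delta_t)

# Airflow per kW of server heat at the temperatures quoted in the text.
print(f"{airflow_cfm(1.0):.0f} CFM per kW at 95F hot / 68F cold")

# If the cold side drifts warmer, the same heat needs more air.
print(f"{airflow_cfm(1.0, cold_f=75.0):.0f} CFM per kW at 95F hot / 75F cold")
```

The second line shows why the temperature split matters: the narrower the gap between hot side and cold side, the more air the fans have to push for the same heat.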

You can find versions of the hot aisle/cold aisle server room in offices with high data demands. They typically do not have the raised computer floors or PDUs. They have Computer Room Air Conditioners (CRAC) instead of the chilled water CRAH. The CRACs have an outdoor compressor/condenser unit just like any other air conditioner. The CRACs are often “in row” cooling with cooling units between racks as needed, or “in rack” if it’s a single rack with a cooling unit mounted in the rack.

The rack mounted computers themselves have changed. They are no longer the simple commodity grade computers that Google pioneered. Now the push is for processing power per square foot of floor space. The intent is to pack as much power as possible into each U of rack space. The image below is of a 6-U AI-ready Dell server (link at the bottom of the image), well over $100K each. It has six 240-volt, 2800-watt power supplies and should be able to run on three; that’s about an 8.4 kW load per server at full tilt, and nearly all of the power a server draws comes back out as heat. It has 11 cooling fans. It also has eight 700-watt GPU cards. It has multiple network ports on redundant network cards. You can fit five of these in a standard 48-U rack, plus two or three 2-U network switches and/or a keyboard monitor drawer. For reference, each one of these servers has more power than an IBM mainframe of the 1990s. To put the wattage in perspective, your average household receptacle can deliver 1.5 kW continuously and 1.8 kW for short periods.
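Here is the arithmetic from the paragraph above as a short sketch. All the numbers come from the text; the only assumption I am adding is that the three load-carrying power supplies run at their full nameplate rating, so this is a ceiling, not a measured draw.

```python
# Figures quoted in the text for the 6-U server.
PSU_WATTS = 2800
PSUS_CARRYING_LOAD = 3        # six installed, should run on three
GPU_WATTS = 700
GPU_COUNT = 8
SERVERS_PER_RACK = 5
RECEPTACLE_CONTINUOUS_KW = 1.5  # household receptacle, continuous

# Assumption: load-carrying supplies at full nameplate rating (worst case).
server_watts = PSU_WATTS * PSUS_CARRYING_LOAD
gpu_watts = GPU_WATTS * GPU_COUNT
rack_watts = server_watts * SERVERS_PER_RACK

server_kw = server_watts / 1000
gpu_kw = gpu_watts / 1000
rack_kw = rack_watts / 1000
receptacles_per_rack = rack_kw / RECEPTACLE_CONTINUOUS_KW

print(f"Per server: {server_kw:.1f} kW ceiling, {gpu_kw:.1f} kW of that is GPUs alone")
print(f"Per rack of {SERVERS_PER_RACK}: {rack_kw:.1f} kW")
print(f"That is about {receptacles_per_rack:.0f} household receptacles running flat out")
```

Notice the GPUs by themselves account for about two thirds of the per-server ceiling, before you count processors, memory, or those 11 fans.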

The other option that is getting some traction is servers like this Dell 2-U direct chilled water-cooled server below. One 2-U server is less powerful than one 6-U server, but three 2-U servers are more powerful than one 6-U server. That’s six processors instead of two, and twelve GPUs instead of eight, in the same rack space. Each unit has four double fan modules for a total of eight fans. Each server has four 2800-watt, 240-volt power supplies; assuming 50% redundancy, that’s 5.6 kW per server. With the same configuration as above, that is 19 servers per rack. There are pros and cons to this approach; water cooling does let you increase your density. Water cooling does not eliminate all the air cooling; it just makes it more manageable.
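To see what that density does to a single rack, here is a quick side-by-side sketch of the two server types. The server counts and power supply figures are the ones given in the text; as before, I am assuming the load-carrying supplies run at full nameplate rating, so these are upper bounds.

```python
def rack_load_kw(servers_per_rack: int, psu_watts: int, psus_carrying_load: int) -> float:
    """Worst-case rack load, assuming load-carrying PSUs at nameplate rating."""
    return servers_per_rack * psu_watts * psus_carrying_load / 1000

def kw_per_rack_unit(psu_watts: int, psus_carrying_load: int, height_u: int) -> float:
    """Worst-case power packed into each U of rack space by one server."""
    return psu_watts * psus_carrying_load / 1000 / height_u

# 6-U air-cooled server: five per rack, runs on three of six 2800 W supplies.
air_rack_kw = rack_load_kw(5, 2800, 3)
air_kw_per_u = kw_per_rack_unit(2800, 3, 6)

# 2-U water-cooled server: 19 per rack, runs on two of four 2800 W supplies.
water_rack_kw = rack_load_kw(19, 2800, 2)
water_kw_per_u = kw_per_rack_unit(2800, 2, 2)

print(f"6-U air-cooled:   {air_rack_kw:.1f} kW per rack, {air_kw_per_u:.1f} kW per U")
print(f"2-U water-cooled: {water_rack_kw:.1f} kW per rack, {water_kw_per_u:.1f} kW per U")
print(f"Rack load ratio:  {water_rack_kw / air_rack_kw:.1f}x")
```

On these numbers a rack of the 2-U servers can pull roughly two and a half times what a rack of the 6-U servers does, which is the whole point of going to water.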

Storage is no longer built into the server, other than some storage for the operating system and support software. Hard drive space is in network-connected RAID arrays. Dell has 2-U drive racks called nodes with 24 SSD drives at up to about 31 TB per drive, for up to 737 TB per node and up to 252 nodes per cluster. That’s 186 PB of storage per cluster, which would fill just over ten 48-U racks. Each node will consume about 1 kW.
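The storage numbers above can be checked with a few lines. Everything here is taken from the text; the only thing I am assuming is that the racks hold nothing but storage nodes, which a real rack would not.

```python
import math

# Figures quoted in the text.
NODES_PER_CLUSTER = 252
TB_PER_NODE = 737
NODE_HEIGHT_U = 2
RACK_HEIGHT_U = 48
KW_PER_NODE = 1.0  # "about 1 kW" per node

cluster_pb = NODES_PER_CLUSTER * TB_PER_NODE / 1000

# Assumption: racks filled with storage nodes only (no switches or drawers).
racks_needed = NODES_PER_CLUSTER * NODE_HEIGHT_U / RACK_HEIGHT_U
whole_racks = math.ceil(racks_needed)

cluster_kw = NODES_PER_CLUSTER * KW_PER_NODE

print(f"Cluster capacity: {cluster_pb:.0f} PB")
print(f"Rack space: {racks_needed:.1f} racks, so {whole_racks} racks on the floor")
print(f"Cluster power: about {cluster_kw:.0f} kW")
```

So a maxed-out cluster is on the order of a quarter of a megawatt just to keep the drives spinning, so to speak, before any of the servers that use them.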

I have talked a lot about redundancy. Everything has a backup: two sets of power supplies powered from completely different sources, and at least two network connections routed through different switches and routers. The RAID arrays have similar connections, plus they are self-healing: you can hot-swap a bad hard drive and the array will rebuild the data onto the new drive. RAID arrays are tolerant of X number of drive failures by design. Power supplies are hot-swap and fans are hot-swap, so servers and drives can be repaired while still online. There are redundant UPS systems and redundant utility feeds, and backup generator systems segmented into redundant sections. The massive cooling system is the same way, with redundant chiller systems, pumps and piping. The whole system is designed to avoid single points of failure causing a catastrophic shutdown.
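If you are wondering how a drive array can rebuild data that was on a dead drive, here is a toy sketch of the single-parity idea used by RAID levels like RAID 5. It is a simplification I put together for illustration, with made-up four-byte "drives", not how any particular vendor's array works.

```python
from functools import reduce

def xor_blocks(blocks):
    """Byte-wise XOR of equal-length byte blocks."""
    return bytes(reduce(lambda a, b: a ^ b, column) for column in zip(*blocks))

# Three hypothetical data drives holding one small block each.
data_drives = [b"rack", b"pods", b"CRAH"]

# The parity drive stores the XOR of all the data blocks.
parity = xor_blocks(data_drives)

# Simulate losing the second drive, then rebuild it from the survivors.
lost_index = 1
survivors = [blk for i, blk in enumerate(data_drives) if i != lost_index]
rebuilt = xor_blocks(survivors + [parity])

print("Rebuilt block:", rebuilt)
print("Matches the lost drive:", rebuilt == data_drives[lost_index])
```

One parity block covers one lost drive. Arrays that tolerate more failures keep more redundancy, which is where the "X number of drive failures" comes from.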

I should point out these are very noisy places, and hearing protection is recommended. In the pods, the combined noise of all the fans is very loud, and so are the UPS systems, the chillers, and the dry transformers if they are used. Even a loaded switchboard makes a lot of noise.

So, we now know a data center is a big building stuffed with racks and racks of servers divided up into pods to use as much floor space as possible and still allow access. That the floors below are stuffed with UPS systems, batteries, switchgear and transformers to power all that equipment. That there is a massive cooling plant circulating chilled water through the building to collect all the heat all this equipment generates. Where does AI come in?

AI as I said in the beginning is just a program, but a massively resource hungry program. Just like you can’t run Windows 11 on your old Windows 3.1 computer, you can’t just load AI on a server in your garage. Where all this server and storage space might support a whole community of cloud computing customers, that same data center may be almost entirely consumed to run a powerful AI program. That’s why it needs so much power.

So next time you see a TV show or a movie where some nefarious group has set up a server farm in a basement or some barn on a farm, take a closer look. There are racks and racks of blinking lights, but not a single fan can be heard. The racks are just out in the open with no cooling at all. Yeah, that’s a movie. That same scenario holds true for the guy that has an AI program on his laptop taking over the world. Only in the movies, folks.

I hope you enjoyed it. Criticism and correction welcome; I am not a computer guy.

Nice work. You sure sound like a computer guy to me. I sometimes wonder what the contribution to global warming will be from the exhaust of all these data centers. It is a strange dichotomy. On the one hand we are sequestering CO2 by the megaton to control AGW. On the other we are pumping hot air on a metastatical scale into the atmosphere to bring on the advent of Skynet. Make it make sense.

Great job, very technical information described in a relatively easy way to understand.

I am an electrical systems engineer with a background in nuclear power plant engineering and operations so I get all the power demands. UPS and big ass lead acid batteries were my life for over 30 years. I now see data center power demand is comparable.

I’ve always been interested in computer stuff so thanks!